In the beam pipes of the Large Hadron Collider, unwanted electrons are generated by the circulating beams through different mechanisms. For example, the circulating protons emit photons, which can in turn stimulate the emission of electrons when they are absorbed by the chamber’s wall (photoelectric effect). These electrons are accelerated by the circulating proton bunches toward the opposite side of the beam chamber. Upon impact, they knock secondary electrons out of the surface, which are also accelerated by the circulating beams and can generate more electrons. This triggers an avalanche multiplication process that fills the beam pipe with a so-called “electron cloud” (e-cloud).
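The avalanche mechanism can be illustrated with a deliberately simple toy model. The sketch below assumes that each bunch passage multiplies the number of electrons by a fixed effective yield; the function name, the parameter `effective_yield` and the numerical values are hypothetical and chosen only for illustration. It ignores the chamber geometry, the electron energy spectrum and the saturation that limits a real e-cloud, and it is not how PyECLOUD models the build-up.

```python
def electrons_after_passages(n_seed, effective_yield, n_passages):
    """Toy avalanche: multiply the electron count by a fixed factor per bunch passage.

    effective_yield is a hypothetical net multiplication factor per passage
    (electrons emitted per electron lost), not a measured quantity.
    """
    counts = [float(n_seed)]
    for _ in range(n_passages):
        counts.append(counts[-1] * effective_yield)
    return counts


# Illustrative values only: a yield above 1 grows, a yield below 1 dies out.
growing = electrons_after_passages(n_seed=1.0, effective_yield=1.3, n_passages=20)
decaying = electrons_after_passages(n_seed=1.0, effective_yield=0.8, n_passages=20)

print(f"yield 1.3: relative population after 20 passages = {growing[-1]:.1f}")
print(f"yield 0.8: relative population after 20 passages = {decaying[-1]:.4f}")
```

The point of the sketch is the threshold behavior: as long as more electrons are emitted than absorbed per passage, the population grows exponentially, while below that threshold the seed electrons simply die out.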

E-clouds can induce several effects that are detrimental to the performance of a particle accelerator, notably a degradation of the beam vacuum and a significant energy deposition on the beam pipe surface, which is particularly critical for devices operating at cryogenic temperatures.

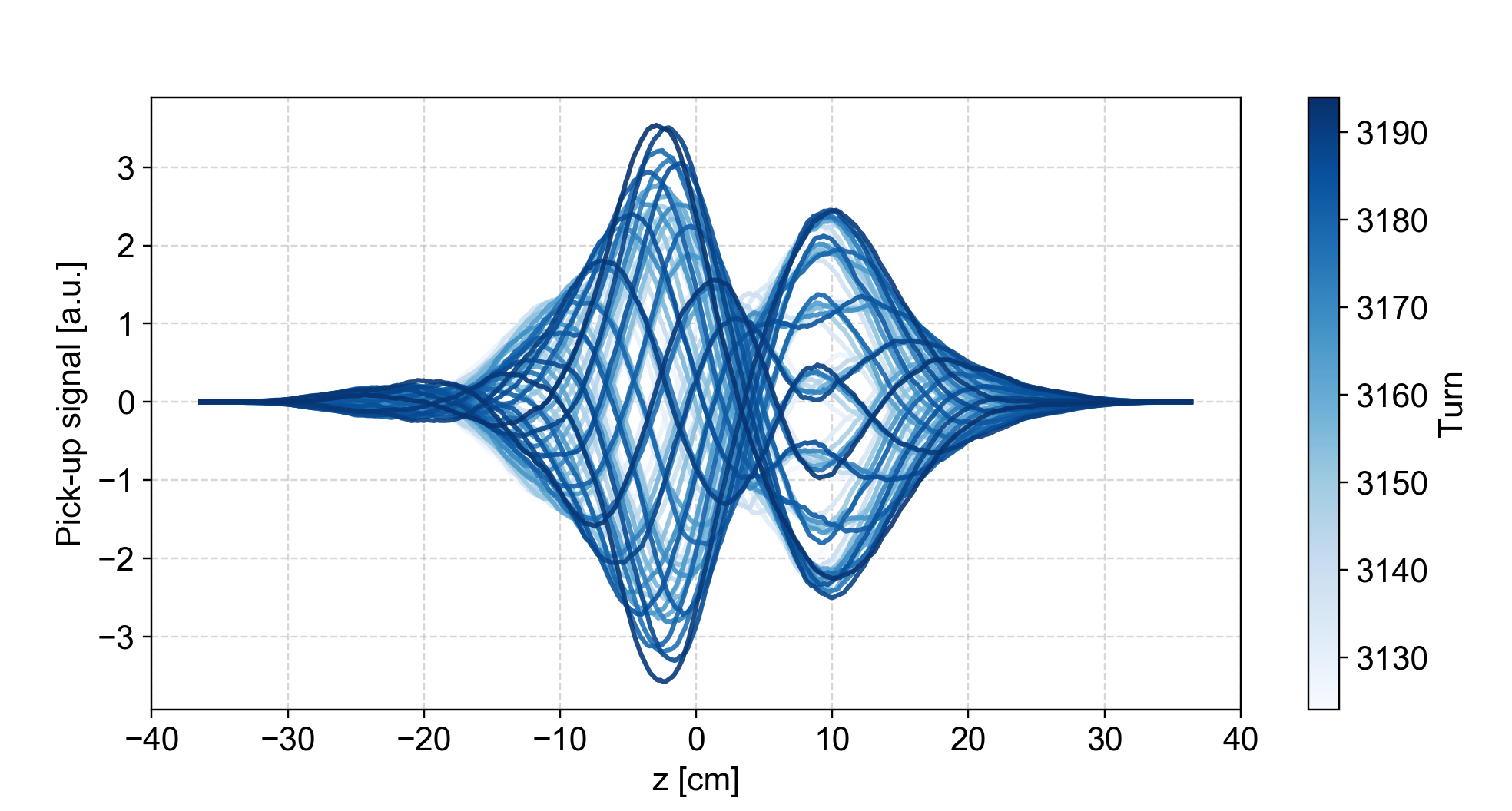

Moreover, the e-clouds in the beam pipe interact electromagnetically with the circulating proton beams, affecting their dynamics. Particularly dangerous are transverse beam instabilities, which are collective oscillations of the proton beams that are amplified turn after turn by the e-cloud. This leads to a large increase in the transverse beam sizes and to severe particle losses. In some cases, these instabilities can be so violent that a beam abort needs to be triggered in order to protect the accelerator.

During an e-cloud driven instability, the motion of the proton beam and the motion of the electrons in the beam pipe are strongly coupled by complex non-linear forces. These effects are difficult to describe with simplified mathematical models. Instead, the understanding of these phenomena relies strongly on complex numerical simulations of the coupled beam-electron dynamics. At CERN, these are carried out using the PyECLOUD-PyHEADTAIL suite, a set of software tools developed and maintained by the Accelerator and Beam Physics group. The results of these simulations are used to prepare for future operation, in particular in the framework of the High-Luminosity LHC (HL-LHC) project.

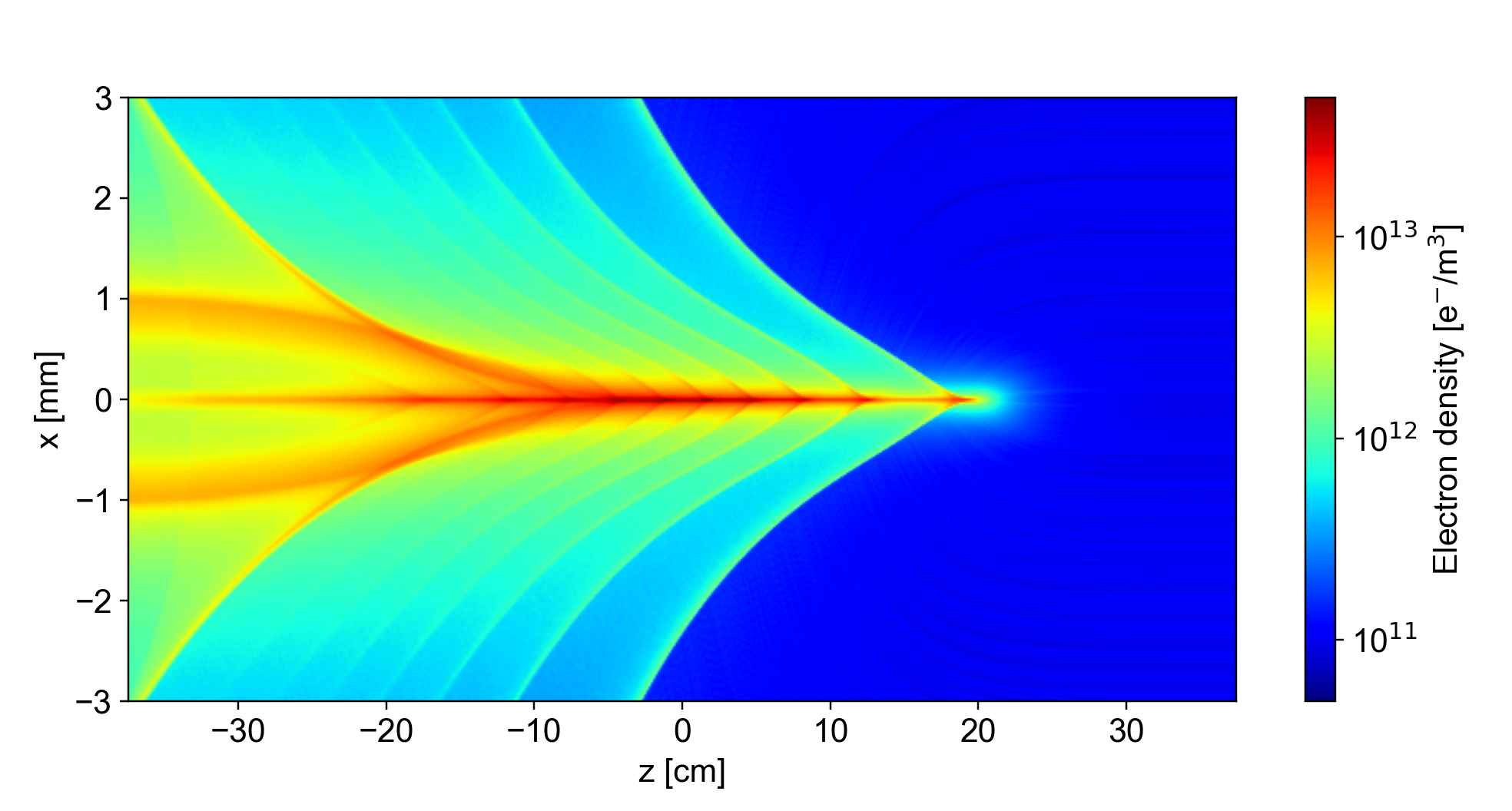

Characteristic waves of the e-cloud density inside a bunch (PyECLOUD-PyHEADTAIL simulation).

Several features make these simulations computationally very heavy. The need to resolve the non-linear forces exerted by the e-cloud within the beams, whose size can be as small as a fraction of a millimeter, imposes tight constraints on the grid size used to compute the electric forces. Moreover, as electrons are very light particles, they can move very fast across the chamber. For this reason, the discrete time-step used in the simulation needs to be very short (on the order of ten picoseconds), while the unstable motion of the beams becomes visible only after several seconds, as a result of the interaction with the e-cloud over many turns around the ring. Therefore, these simulations can require up to billions of discrete time-steps to reveal the unstable motion.
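The gap between the two timescales can be made concrete with a back-of-the-envelope estimate. The sketch below simply divides a simulated duration by the time-step; the 10 ps step comes from the text above, while the function name and the list of durations are assumptions chosen for illustration. It treats every instant as if it were simulated with the fine step, so it is only an order-of-magnitude estimate and says nothing about how PyECLOUD-PyHEADTAIL actually organizes its time stepping.

```python
def n_time_steps(simulated_time_s, time_step_s):
    """Number of steps needed to cover simulated_time_s with a fixed time_step_s."""
    return round(simulated_time_s / time_step_s)


TIME_STEP_S = 10e-12  # about ten picoseconds, as quoted in the text

# Hypothetical simulated durations, for illustration only.
for simulated_time_s in (0.01, 0.1, 1.0):
    steps = n_time_steps(simulated_time_s, TIME_STEP_S)
    print(f"{simulated_time_s:5.2f} s of beam time -> about {steps:.0e} time-steps")
```

Even a small fraction of a second of beam time already corresponds to billions of steps at this resolution, which is why the work has to be spread over many processors.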

To perform the simulations within a reasonable time, it is necessary to resort to parallel computing techniques, which allow the computational load to be shared over several microprocessors. The heaviest e-cloud simulations made at CERN so far required on the order of 1200 CPU-cores for a single simulation.

The CPUs need high-speed communication channels among themselves in order to effectively “collaborate” and carry out a large simulation. The beam physics team exploits three High-Performance Computing (HPC) clusters equipped with the required high-speed network. Two of them, having 1400 CPU-cores each, are installed in the CERN computing center and managed by the IT department. They are used for heavy simulation studies across the Accelerator and Technology Sector at CERN. The third one, having 800 CPU-cores, has been set up at INFN-CNAF in Bologna (Italy) and is fully dedicated to accelerator physics studies for the HL-LHC and LIU (LHC Injectors Upgrade) projects.

These large computing resources allowed the beam physics team to investigate several features of e-cloud driven instabilities, like the dependence on different beam parameters and machine settings, as well as the effectiveness of different strategies to suppress the unstable motion.

One important feature predicted by the simulations is that the increase of the bunch population planned for the HL-LHC can have a beneficial effect on certain kinds of instabilities (those of the “single-bunch” type). This behavior is a result of the non-linear dependence of the e-cloud buildup on the bunch charge and was confirmed experimentally in the LHC during tests with short bunch-trains at the end of 2018.

Transverse oscillations induced by the e-cloud on a proton bunch (PyECLOUD-PyHEADTAIL simulation).

The next challenge faced by the beam physics team is the simulation of “incoherent effects”, through which e-clouds can degrade the quality of the proton beams even when instabilities are fully suppressed. In this case, even if the e-cloud does not trigger a collective oscillation of the beam, it can still destabilize the motion of individual beam particles. This can result in a slow but continuous loss of particles, visible over long timescales (on the order of hours). Modeling these effects poses different problems compared to the collective instability case. Their simulation requires a different kind of computing hardware, Graphics Processing Units (GPUs). For this purpose, dedicated software tools are presently being developed. First test simulations of this kind are now being carried out using GPUs that have recently been made available at INFN-CNAF and at CERN.